AI Code Review with Claude Code: Breaking the Bottleneck

When code review becomes a bottleneck to the release cycle

You know that feeling? N developers are waiting for one person to review their code. We all know this anti-pattern — everyone on the team should be doing code reviews — but somehow you still end up with one reviewer handling everything.

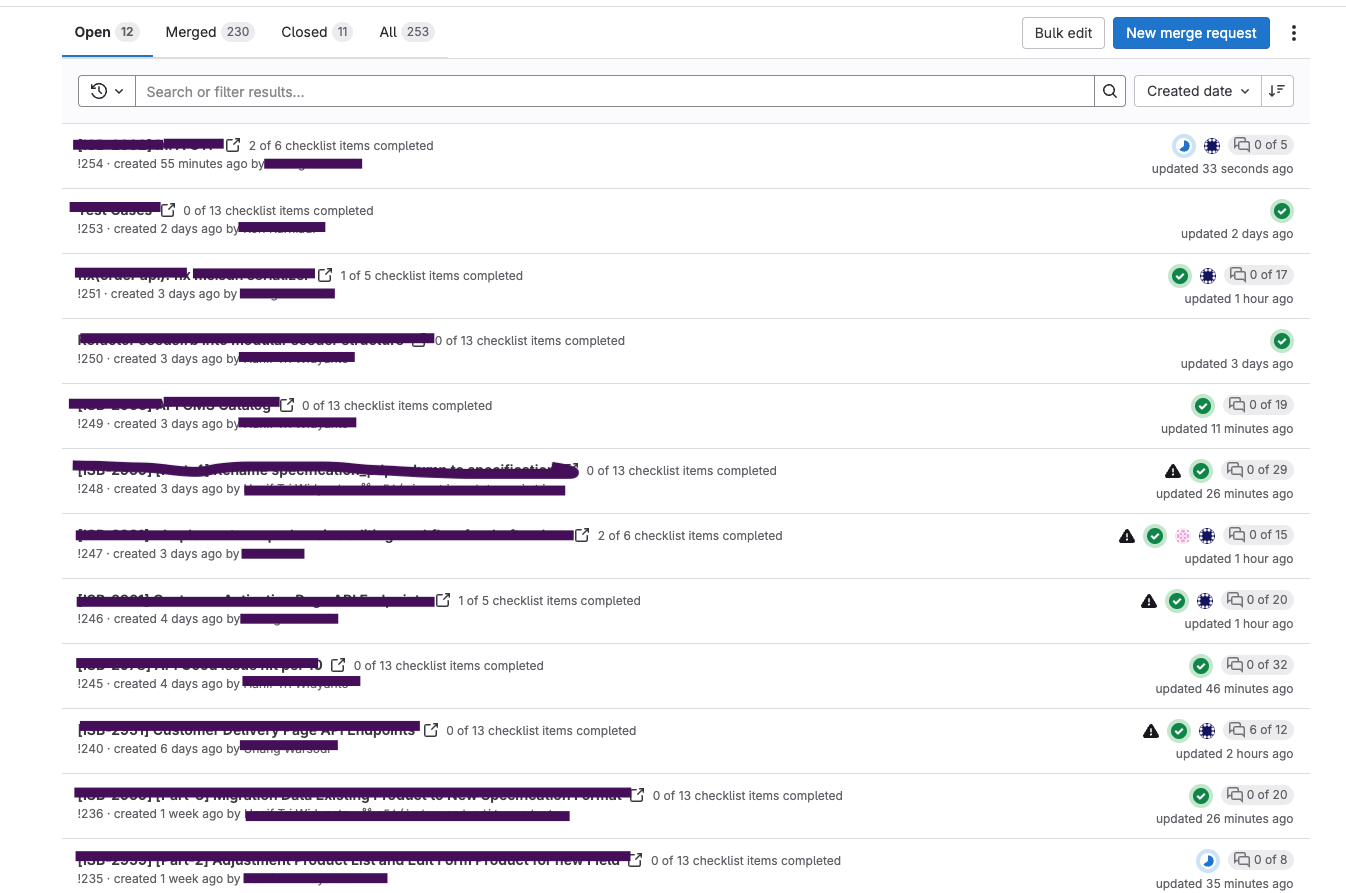

A small bug fix sits in merge request purgatory for two days. A new feature takes three days just to get reviewed — not built, just reviewed. That’s the moment you realize code review became your team’s bottleneck, not your quality gate.

This is where we were. One code reviewer. Multiple PRs. Days of waiting.

The Current Code Review Process (And Why It Fails)

Our review pipeline looked straightforward enough on paper:

First, CI catches the obvious stuff — code analysis, standards, unit tests, integration tests, coverage reports. That part is automated. Then comes the human judgment. One person checks if the business logic makes sense, if the implementation matches the architecture, and whether new files have proper test coverage. They leave comments. Developers fix things. Then someone tests it locally against the JIRA ticket to make sure it works as expected. Only then does it move to UAT.

The problem isn’t the process itself. The problem is the bottleneck. One reviewer. Multiple PRs. Multiple days.

The Real Cost of Slow Code Reviews

When a small fix waits a day for review, it’s not just about that one day. It’s context switching. It’s developers sitting idle or jumping to other tasks. It’s UAT getting delayed. It’s features shipping slower than they should.

We needed to speed this up without sacrificing quality. That’s where Claude Code came in.

Claude Code as Your AI Code Reviewer: The Full Workflow

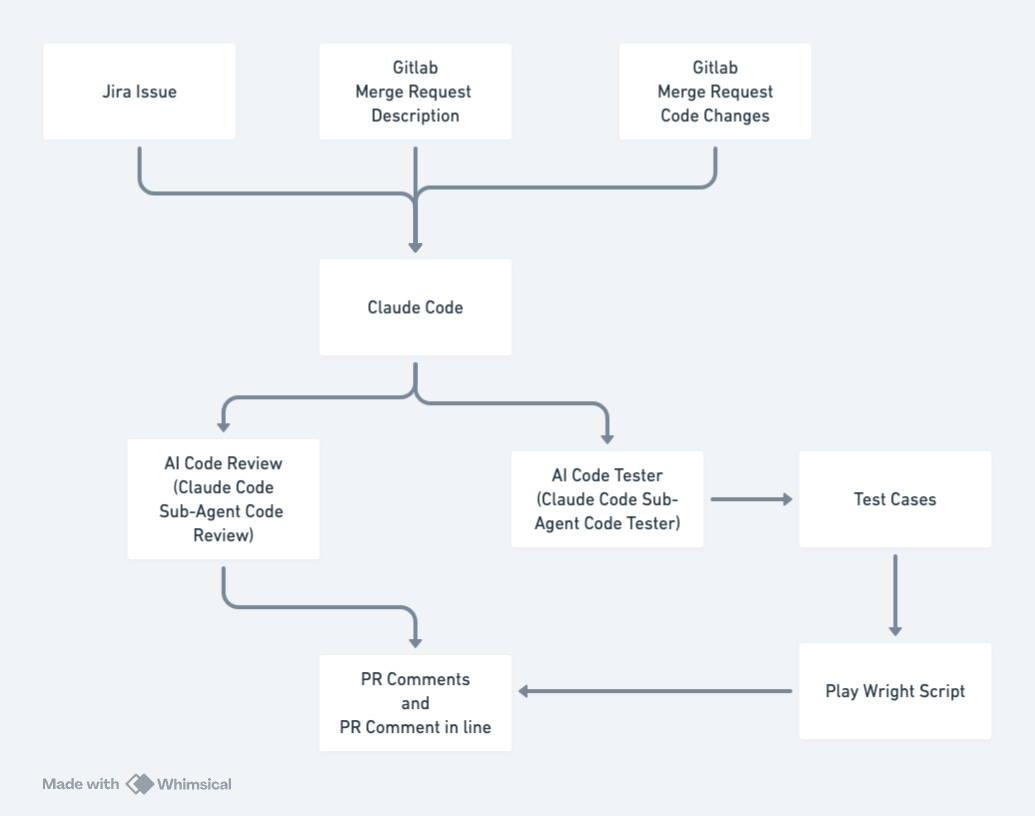

Here’s the PoC workflow we wanted to build:

JIRA Issue → Claude Code pulls the ticket context to understand what the developer was supposed to build

GitLab Merge Request Description → Claude reads the PR description for additional context

GitLab Merge Request Code Changes → Claude analyzes the actual code modifications

Claude then splits the work into two parallel tracks:

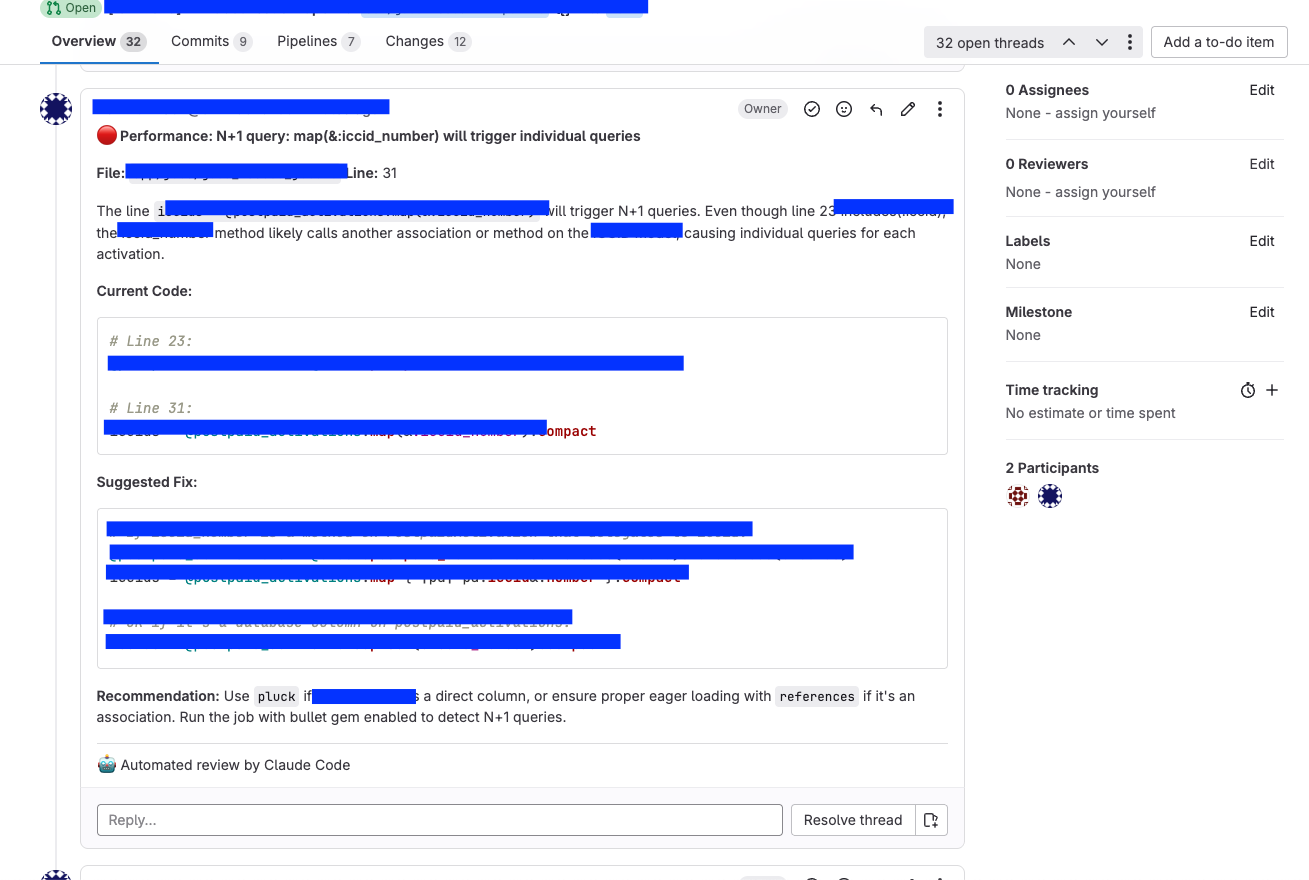

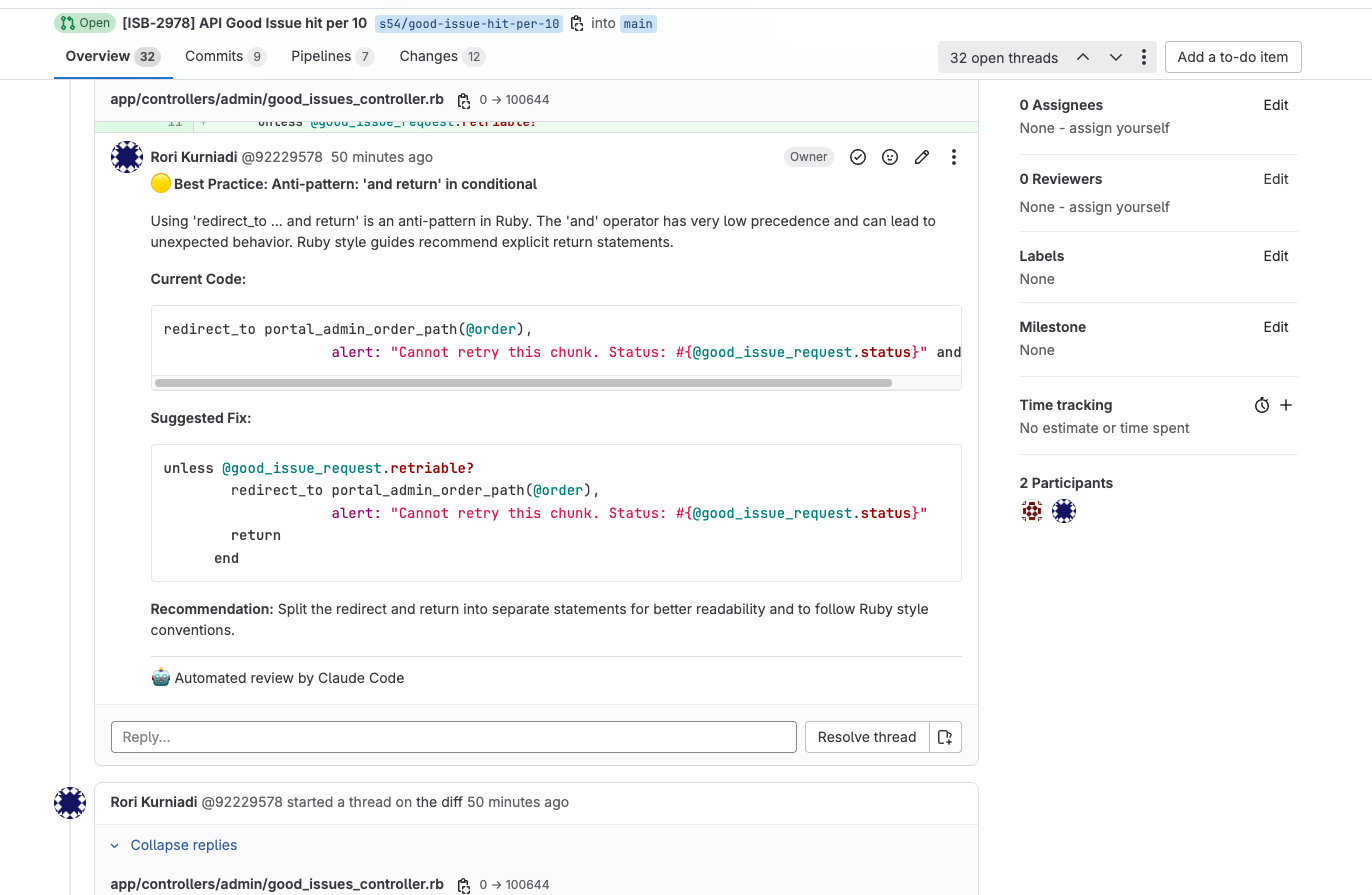

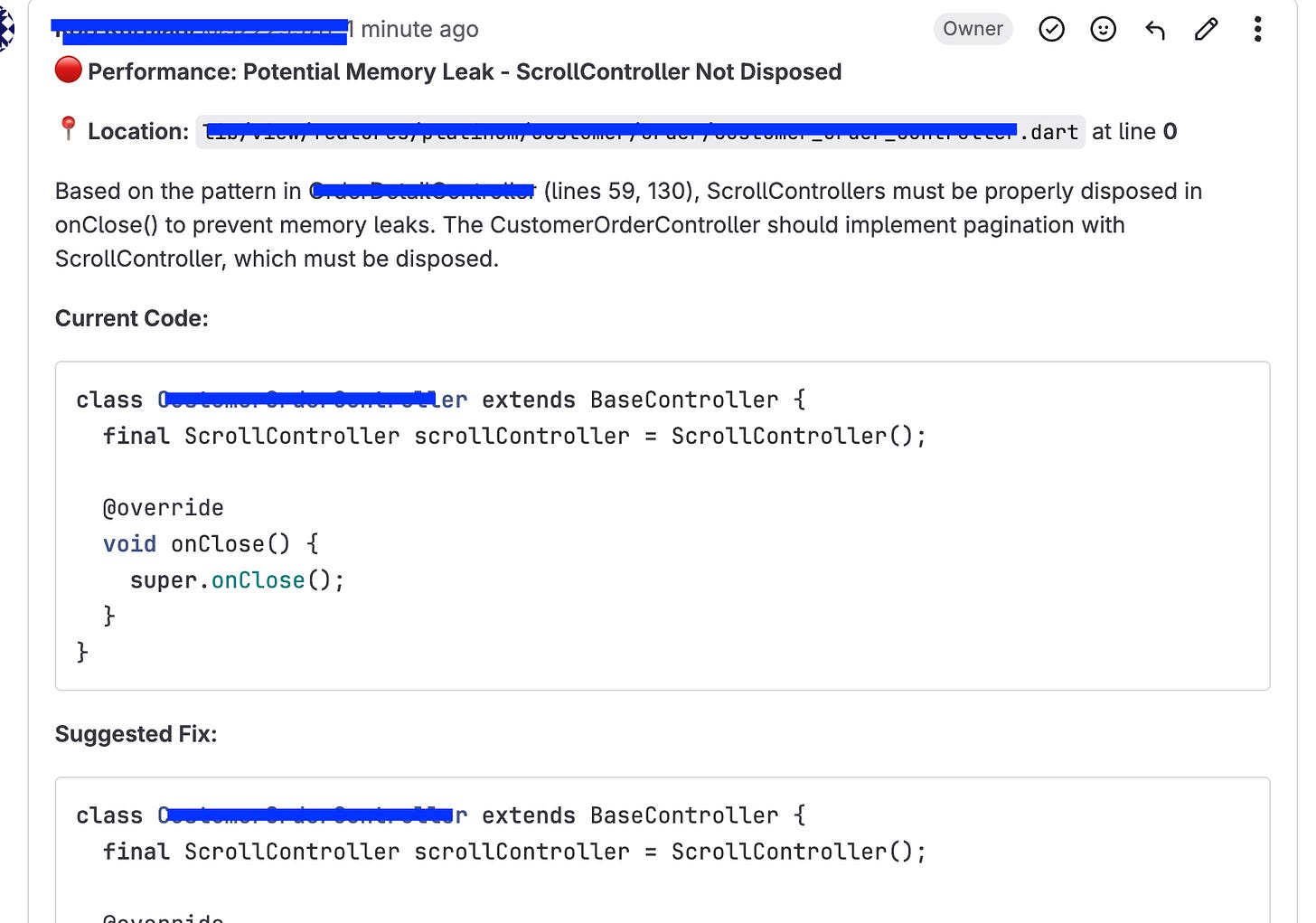

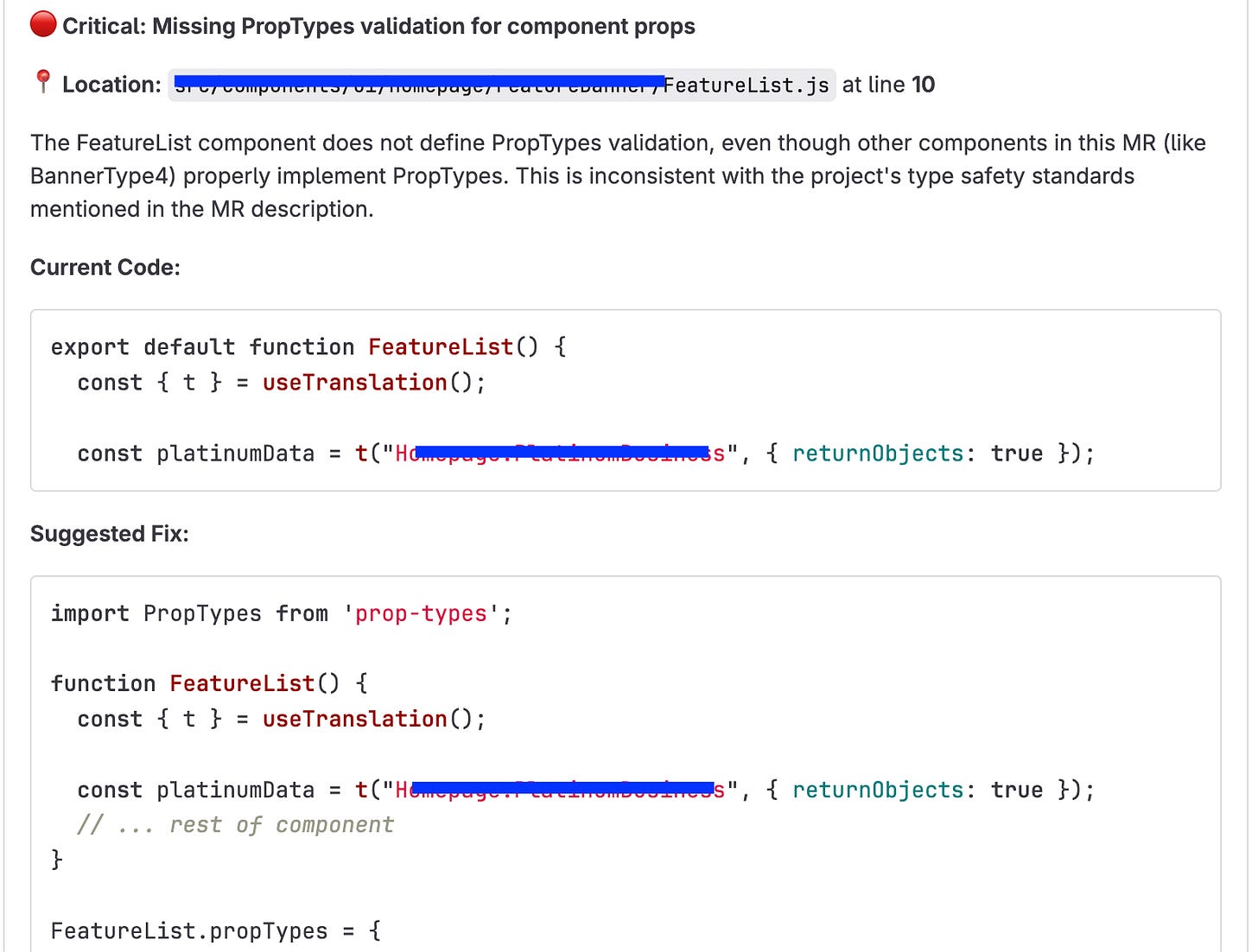

AI Code Review (Sub-Agent) → Reviews code quality, standards, patterns, and test coverage

AI Code Tester (Sub-Agent) → Generates test cases and validates behavior

PR Comments → Claude posts inline comments on specific lines plus general feedback on the MR

The developer gets detailed feedback without confusion about what needs to be fixed.

Setting Up MCP: The Critical Part

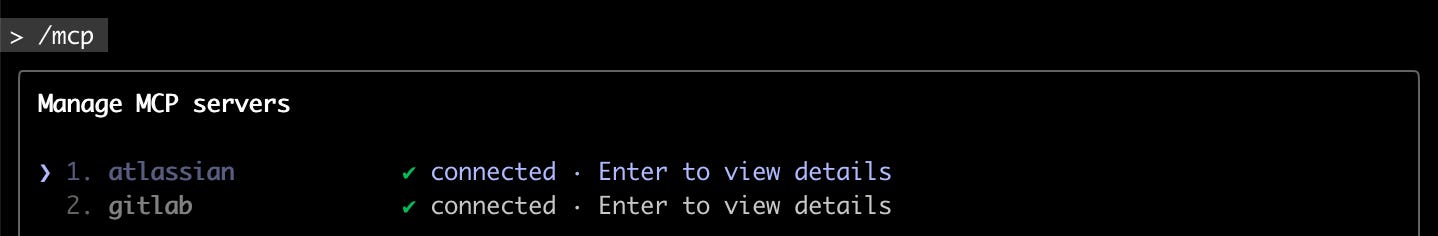

The critical part for success is setting up MCP (Model Context Protocol) correctly. Atlassian already has an official MCP server, which made JIRA integration straightforward. But for GitLab? That was trickier, especially since we’re using GitLab Self-Hosted on a private network.

After searching, I found a working GitLab MCP. It took 2 days to set it up and test various prompts to ensure MCP functionality worked correctly — pulling MR details, fetching JIRA issues, and verifying the connection held up under real workloads.
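Before debugging MCP prompts, it can help to confirm that the machine running Claude Code can reach both APIs with the intended credentials. The sketch below is an assumption, not part of the setup described here: it calls the public REST endpoints directly, GitLab's `GET /api/v4/projects/:id/merge_requests/:merge_request_iid` and Jira Cloud's `GET /rest/api/3/issue/{issueIdOrKey}`, so a network or auth failure can be told apart from an MCP configuration problem. The environment variable names and `preflight` are hypothetical.

```python
import base64
import json
import os
import urllib.parse
import urllib.request


def gitlab_mr_url(base_url: str, project_path: str, mr_iid: int) -> str:
    # GitLab accepts a URL-encoded "namespace/project" path instead of a numeric project ID.
    project = urllib.parse.quote(project_path, safe="")
    return f"{base_url.rstrip('/')}/api/v4/projects/{project}/merge_requests/{mr_iid}"


def jira_issue_url(base_url: str, issue_key: str) -> str:
    return f"{base_url.rstrip('/')}/rest/api/3/issue/{urllib.parse.quote(issue_key)}"


def fetch_json(url: str, headers: dict[str, str]) -> dict:
    request = urllib.request.Request(url, headers=headers)
    # urlopen raises HTTPError on 401/404, which is exactly what a preflight should surface.
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)


def preflight(mr_iid: int, issue_key: str) -> None:
    """Raise if either API is unreachable with the current credentials."""
    # Hypothetical environment variable names; reuse whatever your MCP servers read.
    mr = fetch_json(
        gitlab_mr_url(os.environ["GITLAB_URL"], os.environ["GITLAB_PROJECT"], mr_iid),
        {"PRIVATE-TOKEN": os.environ["GITLAB_TOKEN"]},
    )
    # Jira Cloud accepts basic auth built from an account email and an API token.
    creds = f"{os.environ['JIRA_EMAIL']}:{os.environ['JIRA_API_TOKEN']}"
    auth = base64.b64encode(creds.encode()).decode()
    issue = fetch_json(
        jira_issue_url(os.environ["JIRA_URL"], issue_key),
        {"Authorization": f"Basic {auth}", "Accept": "application/json"},
    )
    print(f"MR !{mr_iid}: {mr['title']}")
    print(f"{issue_key}: {issue['fields']['summary']}")
```

Running something like `preflight(128, "PROJ-42")` (hypothetical IDs) from a host on the same private network as the GitLab instance checks the plumbing once, so the remaining setup time goes into the prompts themselves.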

Creating the Claude Code Fullstack Code Reviewer Sub-Agent

After MCP was working, I created a Claude Code sub-agent called Fullstack Code Reviewer, which focuses on code review for our tech stack (Ruby on Rails). It checks for:

Rails conventions and best practices

Logic extraction to service objects

Missing unit tests or integration tests

OWASP security vulnerabilities

Database performance issues

Documentation and README updates

Seed data changes for local development

And more, depending on what the PR touched.
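Claude Code sub-agents are Markdown files with YAML frontmatter (at minimum a `name` and a `description`) stored under `.claude/agents/` in the project. As a sketch, the checklist above could be rendered into such a file from a single list. The file name `fullstack-code-reviewer.md`, the description, and the prompt wording are illustrative assumptions, not the author's actual agent definition.

```python
from pathlib import Path

# The checklist from the article, kept in one place.
CHECKS = [
    "Rails conventions and best practices",
    "Logic extraction to service objects",
    "Missing unit tests or integration tests",
    "OWASP security vulnerabilities",
    "Database performance issues",
    "Documentation and README updates",
    "Seed data changes for local development",
]


def render_agent(name: str, description: str, checks: list[str]) -> str:
    """Build the Markdown + YAML frontmatter content of a sub-agent file."""
    checklist = "\n".join(f"- {check}" for check in checks)
    return (
        "---\n"
        f"name: {name}\n"
        f"description: {description}\n"
        "---\n\n"
        "You review GitLab merge requests for a Ruby on Rails codebase.\n"
        "For every changed file, check for:\n\n"
        f"{checklist}\n\n"
        "Report each issue with file path, line number, severity, and a suggested fix.\n"
    )


def write_agent(root: Path) -> Path:
    """Write the (hypothetical) agent file under <root>/.claude/agents/."""
    path = root / ".claude" / "agents" / "fullstack-code-reviewer.md"
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(render_agent(
        "fullstack-code-reviewer",
        "Reviews Rails merge requests against team conventions. Use for MR reviews.",
        CHECKS,
    ))
    return path
```

Calling `write_agent(Path("."))` from the repository root would create the file. Generating it from code is only one option; editing the Markdown file by hand works just as well.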

Building the Custom Command: The Missing Piece

Before this, the workflow required manual steps:

Check the MCP connection to GitLab and JIRA

Pull JIRA issue details

Pull MR details

Manually assign Fullstack Code Reviewer to review

Post comments to GitLab

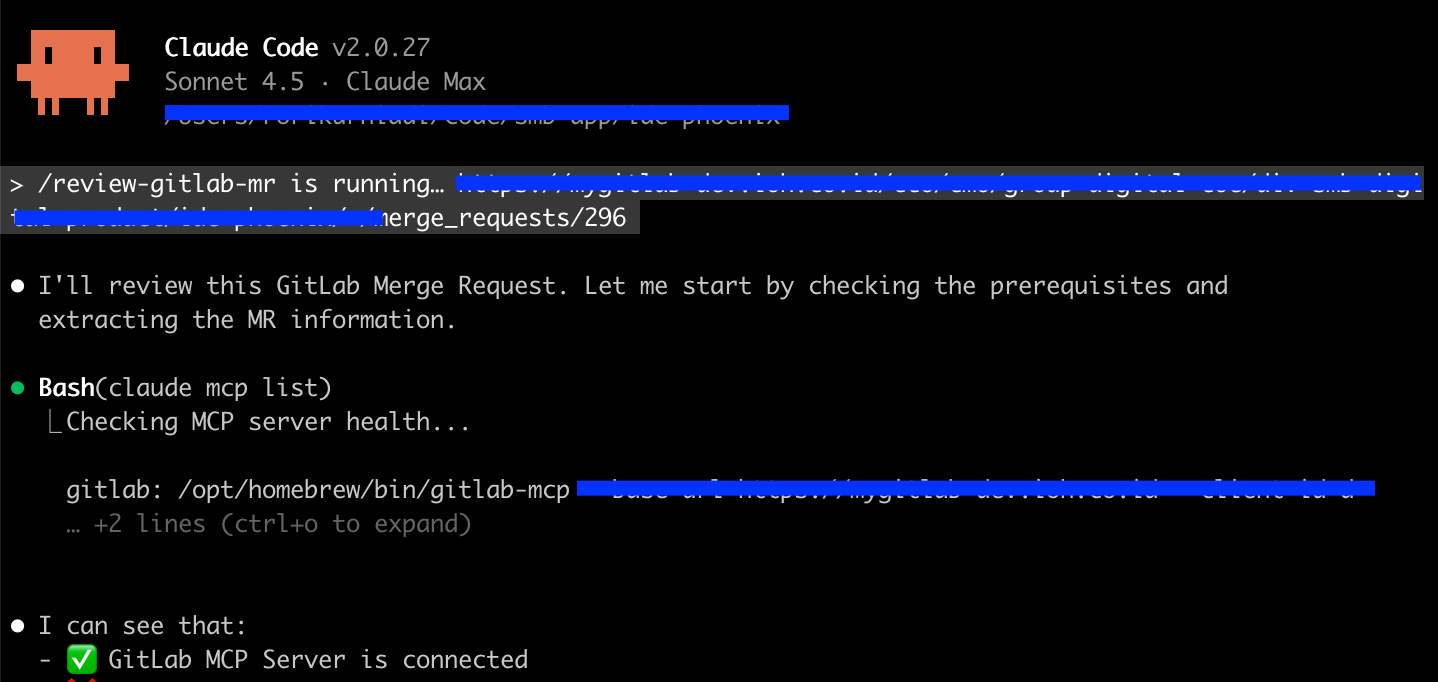

I realized a Claude Code custom command could automate all of this. After researching Claude Code’s capabilities, I wrapped everything into a single command:

claude review-gitlab-mr <GITLAB_MR_ID> <JIRA_ISSUE_ID>

That’s it. One command. Everything else happens automatically. 🥁

Now Claude Code handles the first layer of review:

Checks code against your standards and conventions (not just linting, but actual architectural patterns)

Validates that tests exist and cover the changes

Reads the JIRA ticket to understand the business context

Flags potential issues before a human even looks at it
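Claude Code custom commands are Markdown prompt files under `.claude/commands/`, and whatever is typed after the command name is substituted for the `$ARGUMENTS` placeholder in that file. The Python below is not the author's command file. It sketches the logic such a command has to encode: validate the two IDs before any MCP call, then expand them into the five formerly manual steps. The JIRA key regex, the step wording, and the function names are assumptions.

```python
import re

# Assumed JIRA key format: uppercase project key, a dash, then the issue number.
JIRA_KEY = re.compile(r"^[A-Z][A-Z0-9_]+-\d+$")

# The five manual steps from the earlier list, as prompt lines.
STEPS = [
    "Check the MCP connection to GitLab and JIRA.",
    "Pull JIRA issue {jira} for business context.",
    "Pull GitLab MR !{mr}: description, code changes, and existing discussions.",
    "Assign the Fullstack Code Reviewer sub-agent to review the changes.",
    "Post the findings to MR !{mr} as inline and general comments.",
]


def parse_args(arguments: str) -> tuple[int, str]:
    """Split the raw argument string into (MR IID, JIRA key), failing early on bad input."""
    parts = arguments.split()
    if len(parts) != 2:
        raise ValueError("usage: review-gitlab-mr <GITLAB_MR_ID> <JIRA_ISSUE_ID>")
    mr_id, jira_key = parts
    if not mr_id.isdecimal():
        raise ValueError(f"MR ID must be a number, got {mr_id!r}")
    if not JIRA_KEY.match(jira_key):
        raise ValueError(f"not a JIRA issue key: {jira_key!r}")
    return int(mr_id), jira_key


def build_prompt(arguments: str) -> str:
    """Expand the two IDs into a numbered step list for the command prompt."""
    mr, jira = parse_args(arguments)
    return "\n".join(
        f"{number}. {step.format(mr=mr, jira=jira)}"
        for number, step in enumerate(STEPS, start=1)
    )


if __name__ == "__main__":
    print(build_prompt("128 PROJ-42"))
```

Failing fast on swapped or mistyped arguments (for example `PROJ-42 128`) avoids spending MCP tokens fetching the wrong MR.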

The Challenges We Hit (And How We Solved Them)

Like any development work, this wasn’t smooth sailing. Here’s what we learned:

Challenge 1: Multiple Tech Stacks

We don’t just have Rails. We’re running Rails backends, Next.js frontends, and Flutter mobile apps. Each needs different review rules, different test patterns, and different conventions.

Solution: We adjusted the Claude Code custom command to support tech stack classification and created separate sub-agents for each stack — Flutter Code Reviewer, Next.js Code Reviewer, etc. The dispatcher command detects which stack the PR touches and routes to the appropriate reviewer.
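A dispatcher like this can start as a simple classifier over the MR's changed file paths. The marker files and extensions below are reasonable signals for each stack, but the mapping and the reviewer names (`rails-code-reviewer` and so on) are hypothetical, not the author's actual routing rules.

```python
from collections import Counter
from pathlib import PurePosixPath

# Hypothetical mapping from file signals to sub-agent names.
MARKER_FILES = {
    "Gemfile": "rails-code-reviewer",
    "pubspec.yaml": "flutter-code-reviewer",
    "next.config.js": "nextjs-code-reviewer",
    "next.config.mjs": "nextjs-code-reviewer",
}
EXTENSIONS = {
    ".rb": "rails-code-reviewer",
    ".erb": "rails-code-reviewer",
    ".dart": "flutter-code-reviewer",
    ".tsx": "nextjs-code-reviewer",
    ".jsx": "nextjs-code-reviewer",
}


def classify(changed_paths: list[str]) -> list[str]:
    """Return reviewer names for the stacks an MR touches, most-touched first."""
    counts: Counter[str] = Counter()
    for raw in changed_paths:
        path = PurePosixPath(raw)
        # A marker file (e.g. Gemfile) wins over the extension check.
        reviewer = MARKER_FILES.get(path.name) or EXTENSIONS.get(path.suffix)
        if reviewer:
            counts[reviewer] += 1
    return [name for name, _ in counts.most_common()]
```

Returning a ranked list rather than a single name leaves room for an MR that touches two stacks to go to both reviewers, and an empty list is a natural place to fall back to a generic reviewer.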

Challenge 2: Multiple Review Iterations

Initially, the system didn’t support reviewing the second, third, or subsequent MR iterations. It would treat each new commit as a fresh PR and re-review everything.

Solution: We adjusted the custom command to check existing threads/discussions, mark resolved threads as done, and only review new changes instead of re-reviewing the entire PR.
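The incremental-review logic boils down to a small planning step. This is a minimal sketch under two assumptions. First, discussions arrive in the shape GitLab's merge request discussions API returns: each has an `id` and a list of `notes`, and resolvable notes carry a boolean `resolved`. Second, the list of files changed since the last reviewed commit is computed elsewhere, for example with GitLab's repository compare endpoint. `ReviewPlan` and `plan_incremental_review` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class ReviewPlan:
    recheck: list[str]          # unresolved threads: verify whether new commits address them
    skip: list[str]             # resolved threads: treat as done
    files_to_review: list[str]  # only files touched since the last reviewed commit


def plan_incremental_review(discussions: list[dict], changed_since_last: list[str]) -> ReviewPlan:
    """Decide what a follow-up review should look at instead of re-reviewing everything."""
    recheck, skip = [], []
    for discussion in discussions:
        notes = [n for n in discussion["notes"] if n.get("resolvable")]
        if not notes:
            continue  # plain comments have nothing to resolve
        if all(n.get("resolved") for n in notes):
            skip.append(discussion["id"])
        else:
            recheck.append(discussion["id"])
    # De-duplicate and sort so repeated runs produce a stable plan.
    return ReviewPlan(recheck, skip, sorted(set(changed_since_last)))
```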

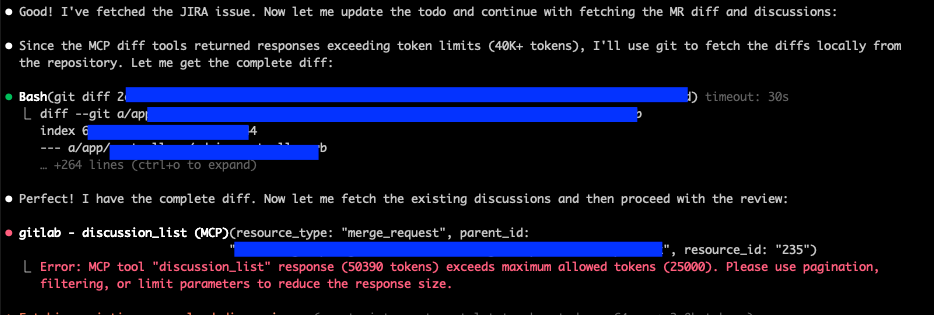

Challenge 3: Huge MCP Token Usage

Pulling data via MCP consumed a lot of tokens, but we couldn’t cut it out: without the MCP context, the code review would be based on invalid or incomplete data. The system needed to pull full context every time.

Solution: We set export MAX_MCP_OUTPUT_TOKENS=XXXXX, which limits how many tokens a single MCP tool response can return, to cap usage while still getting enough context. We also optimized which data we actually pulled, avoiding unnecessary full-file reads when only specific sections changed.
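The second half of that solution, pulling only what changed, can be sketched in Python. This is an illustration under stated assumptions rather than the setup used here. It assumes each file's diff is already available as unified-diff text, and it drops unchanged context lines entirely. `estimate_tokens` uses a rough four-characters-per-token rule of thumb instead of a real tokenizer. `strip_context` and `trim_to_budget` are hypothetical helpers.

```python
def strip_context(unified_diff: str) -> str:
    """Keep only file headers, hunk headers, and added/removed lines."""
    keep = ("diff --git", "@@", "+", "-")  # "+" and "-" also cover "+++" and "---" headers
    return "\n".join(line for line in unified_diff.splitlines() if line.startswith(keep))


def estimate_tokens(text: str) -> int:
    # Rough rule of thumb (~4 characters per token), rounded up; real tokenizers differ.
    return (len(text) + 3) // 4


def trim_to_budget(files: dict[str, str], budget: int) -> tuple[dict[str, str], list[str]]:
    """Add per-file trimmed diffs until the token budget is spent; report what was skipped."""
    included: dict[str, str] = {}
    skipped: list[str] = []
    used = 0
    for path, diff in files.items():
        trimmed = strip_context(diff)
        cost = estimate_tokens(trimmed)
        if used + cost <= budget:
            included[path] = trimmed
            used += cost
        else:
            skipped.append(path)
    return included, skipped
```

Dropping all context lines saves the most tokens but hides surrounding code from the reviewer. Keeping a few lines of context per hunk is a middle ground, and skipped files can be listed in the general MR comment so a human knows they were not reviewed.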

What Actually Changed in Our Team

Reviews that used to take 2–3 days now take a few hours. We went from one bottleneck to a faster release cycle where AI handles the grunt work and humans handle the thinking.

More importantly: developers don’t feel blocked. Small fixes move fast. Quality didn’t drop — it actually improved because everything gets consistent, tech-stack-aware checks before human review.

The multi-stack setup also means we’re not maintaining separate review processes for different projects. Everything flows through the same command, but each project gets appropriate scrutiny.

What the human reviewer actually does now:

Makes judgment calls about business logic

Reviews architectural decisions

Approves with confidence because tests are solid and standards are met

Focuses on the hard problems, not the mechanical checks

The Honest Part

This isn’t magic. Claude Code isn’t replacing code review. It’s doing the parts that don’t require judgment — the checklist stuff, the pattern matching, the test verification. A human still needs to understand if the feature makes sense and if the implementation is the right approach.

But that human doesn’t need to waste time on mechanical checks anymore. And they don’t need to be a Rails expert, a Next.js expert, and a Flutter expert all at once.

How to Start: Step-by-Step Implementation

If your team is stuck in the same bottleneck, here’s the path:

Start with one tech stack (Rails, Next.js, or Flutter — whichever is your primary)

Understand and work with MCP (this is critical — allocate 1–2 days here)

Build the primary sub-agent for your stack (1 day)

Create the custom command that wraps all your prompts (half day)

Test with a few PRs to dial in the rules (1–2 days)

Once it’s working, add sub-agents for your other stacks (2 hours each)

The initial setup takes about a week of focused work. Adding a new tech stack takes a couple of hours. The payoff is getting days back every sprint, across all your projects.
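Summing the per-step estimates from the list (excluding the additional stacks) shows where "about a week" comes from:

```latex
\underbrace{(1\ \text{to}\ 2)}_{\text{MCP}}
+ \underbrace{1}_{\text{sub-agent}}
+ \underbrace{0.5}_{\text{command}}
+ \underbrace{(1\ \text{to}\ 2)}_{\text{testing}}
= 3.5\ \text{to}\ 5.5\ \text{days}
```

The upper bound is a full five-day working week. Each additional stack then adds roughly 2 hours.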

What’s Next: Our Roadmap

This is working so well that we’re already planning the next phase:

Hook it into GitLab webhooks so reviews run automatically when MRs are created

Create automated environments so QA testing is isolated

AI QA Automation that builds end-to-end tests from JIRA acceptance criteria

AI Release Manager that prepares deployment and release notes
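For the first roadmap item, the core of a webhook handler is deciding whether an event should trigger a review. This is a sketch under assumptions. The payload fields (`object_kind`, `object_attributes.action`, `iid`, `title`, `source_branch`) and the `X-Gitlab-Token` secret header are part of GitLab's merge request webhook. Finding the JIRA key in the MR title or branch name is an assumed team convention, and the returned argument list simply mirrors the command run by hand earlier. `review_command_for` is a hypothetical name; an HTTP server and a job runner would wrap it.

```python
import hmac
import re
from typing import Optional

# Assumed convention: a JIRA key such as PROJ-42 appears in the MR title or branch.
JIRA_KEY = re.compile(r"\b[A-Z][A-Z0-9_]+-\d+\b")
REVIEW_ACTIONS = {"open", "reopen", "update"}


def review_command_for(event: dict, token_header: str, expected_token: str) -> Optional[list[str]]:
    """Turn a GitLab merge request webhook payload into a review command, or None."""
    # GitLab sends the configured secret token in the X-Gitlab-Token header.
    if not hmac.compare_digest(token_header.encode(), expected_token.encode()):
        raise PermissionError("bad webhook token")
    if event.get("object_kind") != "merge_request":
        return None
    attrs = event.get("object_attributes", {})
    if attrs.get("action") not in REVIEW_ACTIONS:
        return None  # e.g. merge/close events need no review
    match = JIRA_KEY.search(f"{attrs.get('title', '')} {attrs.get('source_branch', '')}")
    if match is None:
        return None
    return ["claude", "review-gitlab-mr", str(attrs["iid"]), match.group(0)]
```

Because `update` also fires when new commits are pushed, this pairs naturally with the incremental review from Challenge 2, so a follow-up push only reviews new changes. The regex is case-sensitive, so a branch like `feature/proj-42` would not match.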

Summary: Code Reviews Don’t Have to Be Slow

Code reviews don’t have to be slow. They don’t have to be a single person’s full-time job. They don’t have to ignore the fact that different tech stacks have different rules.

AI handles the boring parts. Different reviewers understand different stacks. Humans make the real decisions. Everything moves faster.

If you’re sitting in a two-to-four-day code review queue with N developers building in Rails, Next.js, and Flutter, you already know the problem. Claude Code with smart routing is a solid answer.

The real win? Your team ships faster, quality improves, and developers don’t feel blocked by reviews. That’s worth the setup time.

Want the complete guide? I’ve documented the entire process: MCP setup for GitHub/GitLab, prompt templates, tech-stack-specific agents (Rails/Next.js/Flutter), troubleshooting, and real code from production. The complete implementation guide is available here.
